Artificial intelligence is fundamentally changing how data centers operate. Facilities designed for standard enterprise computing often cannot handle the power and cooling demands of modern AI workloads.

Consequently, data center operators are facing critical infrastructure decisions as they navigate this new computational territory.

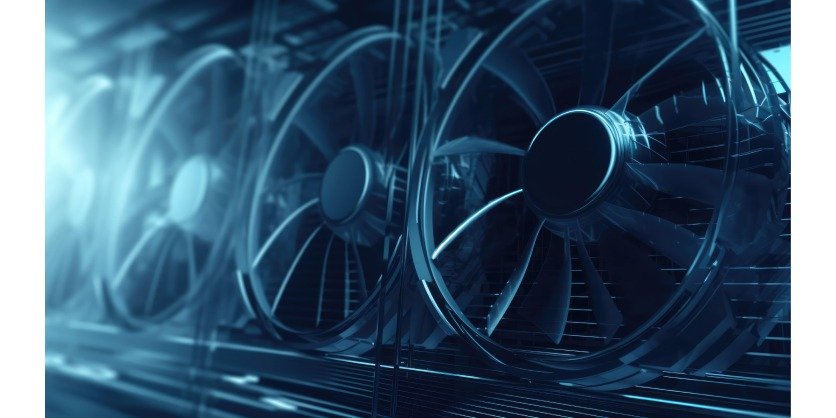

The AI-Driven Cooling Crisis

AI workloads generate unprecedented heat levels that push conventional cooling systems beyond their capabilities. Legacy air-cooled infrastructures, originally designed for modest 5-10 kW racks, are now confronting AI applications that demand extreme power density, superior cooling efficiency, and enhanced structural support.

This dramatic shift is forcing operators to reconsider their entire facility design approach.

Many organizations are therefore at a crossroads: should they attempt to retrofit existing facilities or completely rebuild their infrastructure? This decision involves complex considerations around cost, operational continuity, and long-term scalability.

Moreover, making the wrong choice could result in significant financial waste or operational bottlenecks.

Understanding Modern Cooling Requirements

The heat generated by AI processing units presents unique challenges compared to traditional computing environments. Several factors make traditional cooling approaches increasingly inadequate:

- Power densities exceeding 50 kW per rack (compared to the traditional 5-10 kW)

- Concentrated heat generation in GPUs and specialized AI accelerators

- Continuous high-utilization workloads rather than variable computing patterns

- Higher operational temperatures that reduce component lifespan

Hence, organizations must develop comprehensive cooling strategies that address these specific challenges rather than simply scaling up conventional approaches.
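To see why simply scaling up air cooling becomes impractical, it helps to estimate the airflow a single rack needs. The following is a minimal sketch, assuming the standard sensible-heat relation (airflow = power / (air density x specific heat x temperature rise)), approximate air properties near room temperature, and a hypothetical 12 K temperature rise across the rack. The function name and default values are illustrative assumptions, not figures from any particular facility.

```python
# Approximate properties of air near room temperature (assumed values).
AIR_DENSITY_KG_M3 = 1.2
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0
M3_PER_S_TO_CFM = 2118.88  # 1 m^3/s expressed in cubic feet per minute


def airflow_m3_per_s(rack_power_w: float, delta_t_k: float = 12.0) -> float:
    """Volumetric airflow needed to carry away rack_power_w of heat.

    delta_t_k is the assumed air temperature rise across the rack
    (a hypothetical default; real designs vary).
    """
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return rack_power_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


if __name__ == "__main__":
    # Compare a traditional rack range with a 50 kW AI rack.
    for rack_kw in (5, 10, 50):
        flow = airflow_m3_per_s(rack_kw * 1000)
        print(f"{rack_kw:>2} kW rack: {flow:.2f} m^3/s ({flow * M3_PER_S_TO_CFM:,.0f} CFM)")
```

Under these assumptions, required airflow scales linearly with rack power, so a 50 kW rack needs five times the airflow of a 10 kW rack at the same temperature rise.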

Liquid Cooling: The New Standard

Among the most promising solutions for AI infrastructure is liquid cooling technology. Unlike traditional air cooling, liquid systems can:

- Handle significantly higher heat loads with greater efficiency

- Reduce overall energy consumption through better heat transfer properties

- Enable higher-density rack configurations

- Lower noise levels and reduce fan dependencies
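The efficiency advantage listed above comes largely from how much heat a given volume of liquid can absorb compared with air. As a rough sketch, assuming approximate room-temperature properties for air and plain water (real systems often use water-glycol mixtures or dielectric fluids with different properties), the comparison can be worked out as follows. The names and the 10 K temperature rise in the usage lines are hypothetical.

```python
# Approximate fluid properties near room temperature (assumed values).
FLUIDS = {
    # name: (density in kg/m^3, specific heat in J/(kg*K))
    "air": (1.2, 1005.0),
    "water": (997.0, 4186.0),
}


def volumetric_heat_capacity(fluid: str) -> float:
    """Heat absorbed per cubic metre of fluid per kelvin, in J/(m^3*K)."""
    density, specific_heat = FLUIDS[fluid]
    return density * specific_heat


def flow_litres_per_min(power_w: float, delta_t_k: float, fluid: str) -> float:
    """Volumetric flow needed to remove power_w at a delta_t_k temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    m3_per_s = power_w / (volumetric_heat_capacity(fluid) * delta_t_k)
    return m3_per_s * 1000 * 60  # m^3/s -> litres per minute


if __name__ == "__main__":
    ratio = volumetric_heat_capacity("water") / volumetric_heat_capacity("air")
    print(f"Water absorbs roughly {ratio:,.0f}x more heat per unit volume than air")
    for fluid in FLUIDS:
        flow = flow_litres_per_min(50_000, 10.0, fluid)
        print(f"50 kW at a 10 K rise with {fluid}: {flow:,.1f} L/min")
```

This only compares bulk heat capacity; it ignores pumping and fan power, heat exchanger effectiveness, and piping losses, all of which affect real-world efficiency.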

Liquid cooling comes in several implementations, including direct-to-chip solutions, immersion cooling, and rear-door heat exchangers. Each approach offers distinct advantages depending on facility constraints and workload characteristics.

Direct-to-Chip Solutions

These systems deliver coolant directly to the hottest components, typically through cold plates attached to CPUs, GPUs, and other high-heat components. This targeted approach can efficiently manage extreme heat generation points without requiring facility-wide liquid infrastructure.

Immersion Cooling Systems

For the most demanding AI applications, immersion cooling submerges entire servers or their components in a non-conductive (dielectric) liquid coolant. This method provides superior thermal management but requires specialized equipment and maintenance protocols.

Retrofitting vs. Rebuilding Considerations

The transition to AI-capable infrastructure presents a fundamental question: Is it better to update existing facilities or build new ones? This decision requires careful analysis of several factors:

Structural Limitations

Many existing data centers weren’t designed with the floor loading capacity, ceiling height, or structural support needed for high-density AI racks and cooling infrastructure. Thus, physical building constraints often dictate what’s possible in retrofit scenarios.
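A first-pass floor loading check can be sketched as a simple comparison between a rack's distributed load and the floor's rated capacity. This is a deliberately crude illustration: the rack masses, the 0.6 m x 1.2 m footprint, and the floor rating below are hypothetical, and a real assessment would also consider point loads, load spreading, and a structural engineer's review.

```python
def distributed_load_kg_per_m2(rack_mass_kg: float, width_m: float, depth_m: float) -> float:
    """Rack mass spread evenly over its footprint, in kg/m^2."""
    if width_m <= 0 or depth_m <= 0:
        raise ValueError("footprint dimensions must be positive")
    return rack_mass_kg / (width_m * depth_m)


def fits_floor_rating(
    rack_mass_kg: float,
    width_m: float,
    depth_m: float,
    floor_rating_kg_per_m2: float,
) -> bool:
    """True if the evenly distributed rack load is within the floor rating."""
    load = distributed_load_kg_per_m2(rack_mass_kg, width_m, depth_m)
    return load <= floor_rating_kg_per_m2


if __name__ == "__main__":
    FLOOR_RATING = 1200.0  # kg/m^2, hypothetical rating for an older facility
    for label, mass_kg in (("legacy rack", 700.0), ("liquid-cooled AI rack", 1500.0)):
        load = distributed_load_kg_per_m2(mass_kg, 0.6, 1.2)
        ok = fits_floor_rating(mass_kg, 0.6, 1.2, FLOOR_RATING)
        print(f"{label}: {load:.0f} kg/m^2, within rating: {ok}")
```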

Operational Continuity

Retrofitting while maintaining operations presents significant challenges, since construction work has to be staged around live equipment. In contrast, building new facilities allows for smoother transitions but may require operating parallel infrastructure during migration periods.

Modular and Prefabricated Solutions

Prefabricated modular data centers offer a middle path between full rebuilds and complex retrofits. These standardized, factory-built solutions provide:

- Faster deployment timeframes

- Purpose-built designs for high-density computing

- Predictable costs and performance

- Scalable capacity that grows with demand

Cost-Effective Cooling Strategy Implementation

Organizations must balance immediate capital expenditure against long-term operational savings. Although advanced cooling systems require a higher initial investment, they typically deliver substantial ongoing benefits:

- Reduced energy consumption (often 25-40% improvement)

- Lower maintenance costs through simplified cooling infrastructure

- Improved hardware lifespan through better thermal management

- Enhanced compute density that maximizes facility space utilization

Additionally, modern cooling systems often incorporate sophisticated monitoring and control systems that optimize performance based on real-time conditions.
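The trade-off between upfront cost and ongoing savings can be roughed out with a simple payback calculation. The sketch below assumes savings come only from reduced energy use and ignores maintenance, financing, and hardware lifespan effects. The capital cost, annual energy use, and electricity price are hypothetical placeholders; only the 25-40% savings range comes from the figures above.

```python
def simple_payback_years(
    extra_capex: float,
    annual_energy_kwh: float,
    price_per_kwh: float,
    savings_fraction: float,
) -> float:
    """Years needed for energy savings to repay the extra capital cost."""
    if not 0 < savings_fraction <= 1:
        raise ValueError("savings_fraction must be in (0, 1]")
    annual_savings = annual_energy_kwh * price_per_kwh * savings_fraction
    if annual_savings <= 0:
        raise ValueError("annual savings must be positive")
    return extra_capex / annual_savings


if __name__ == "__main__":
    # Hypothetical inputs: 2,000,000 extra capex, 10 GWh/year, 0.10 per kWh.
    for fraction in (0.25, 0.40):
        years = simple_payback_years(2_000_000, 10_000_000, 0.10, fraction)
        print(f"{fraction:.0%} energy savings: payback in about {years:.1f} years")
```

With these placeholder inputs, the payback period is quite sensitive to the savings fraction, which is why measured rather than assumed savings matter when comparing options.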

Performance Enhancement Without Complete Rebuilds

For organizations that cannot immediately implement full-scale renovations, several incremental improvements can enhance cooling capabilities:

- Implementing hot/cold aisle containment to improve airflow efficiency

- Upgrading to more efficient CRAC/CRAH units with variable speed drives

- Deploying targeted liquid cooling for high-density racks while maintaining air cooling elsewhere

- Installing improved environmental monitoring systems to identify and address hotspots

These targeted enhancements can significantly improve cooling performance while organizations develop longer-term infrastructure strategies.
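As an illustration of the monitoring step, hotspot identification can be as simple as averaging recent inlet temperature readings per rack and flagging any rack above a limit. This is a minimal sketch: the rack names and readings are made up, and the 27 °C limit is an assumption in line with the upper end of commonly cited recommended inlet ranges, so substitute the limit appropriate for your equipment.

```python
from statistics import mean

ASSUMED_MAX_INLET_C = 27.0  # assumed limit; adjust for your equipment


def find_hotspots(
    inlet_temps_c: dict[str, list[float]],
    limit_c: float = ASSUMED_MAX_INLET_C,
) -> dict[str, float]:
    """Return racks whose average inlet temperature exceeds limit_c.

    Racks with no readings are skipped rather than treated as healthy or hot.
    """
    hotspots = {}
    for rack, readings in inlet_temps_c.items():
        if not readings:
            continue
        average = mean(readings)
        if average > limit_c:
            hotspots[rack] = average
    return hotspots


if __name__ == "__main__":
    # Hypothetical readings in degrees Celsius, keyed by rack label.
    sample = {"A1": [22.0, 23.0], "B4": [28.5, 29.5], "C2": []}
    for rack, average in find_hotspots(sample).items():
        print(f"Rack {rack} averages {average:.1f} C at the inlet")
```

A production system would typically also track trends over time and sensor health, but even a threshold check like this can show where targeted liquid cooling or containment is most needed.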

Future-Proofing AI Infrastructure

As AI workloads continue evolving, today’s cooling solutions must accommodate tomorrow’s requirements. Therefore, designing with flexibility and scalability becomes paramount for long-term success.

The most forward-thinking organizations are implementing hybrid approaches that combine multiple cooling technologies, creating environments that can adapt to changing computational demands. This flexibility ensures capital investments remain valuable even as technology advances.

Expert Editorial Comment

The AI revolution demands a fundamental rethinking of data center cooling approaches. Traditional systems simply cannot meet the extreme demands of modern AI workloads.

Organizations must therefore implement advanced cooling strategies that balance immediate operational needs with long-term growth capabilities.

Whether through retrofitting existing facilities or building new infrastructure, the transition to AI-optimized environments represents both a significant challenge and an opportunity to improve efficiency and performance.

By carefully evaluating cooling options and implementing appropriate solutions, organizations can create robust foundations for their AI computing future.